Chapter 4 Transparency and Explainability

Artificial Intelligence (AI) is increasingly used for decisions that affect our daily lives, such as loan-worthiness, credit scoring, education (admission and success prediction, educator performance, etc.), emergency response, medical diagnosis, job candidate selection, parole determination, recidivism prediction, and criminal punishment. Algorithms are also used to recommend a movie to watch, a person to date, or an apartment to rent. As AI is used in more and more decision-making scenarios where people’s lives or livelihoods are at stake, transparency about what decisions the machine is making, what impact those decisions have, and how the machine arrived at them will become increasingly important.

In this chapter, we start with the following guest lecture on the call for transparency in AI.

Guest Lecture: The need for transparency in Artificial Intelligence [WSAI 2018]

Dr. Evert Haasdijk, one of Deloitte’s Artificial Intelligence experts, was a keynote speaker at the World Summit AI held in Amsterdam in 2018. The theme of his talk was the need for transparency in Artificial Intelligence:

Transparency in AI is closely linked with the principle of explicability and encompasses transparency of elements relevant to an AI system: the data, the system and the business models (main?).

According to the “Ethics guidelines for trustworthy AI” (ec2019ethics?), the ethical principle in the context of AI systems that directly relates to Transparency is the principle of explicability:

The principle of explicability

Explicability is crucial for building and maintaining users’ trust in AI systems. This means that processes need to be transparent, the capabilities and purpose of AI systems openly communicated, and decisions – to the extent possible – explainable to those directly and indirectly affected. Without such information, a decision cannot be duly contested. An explanation as to why a model has generated a particular output or decision (and what combination of input factors contributed to that) is not always possible. These cases are referred to as ‘black box’ algorithms and require special attention. In those circumstances, other explicability measures (e.g. traceability, auditability and transparent communication on system capabilities) may be required, provided that the system as a whole respects fundamental rights. The degree to which explicability is needed is highly dependent on the context and the severity of the consequences if that output is erroneous or otherwise inaccurate.

In this chapter, we will learn about the importance of openly communicating and explaining AI decisions and their impact. We will also explore existing techniques for improving the explainability and interpretability of AI systems.

4.1 Inscrutable autonomous decisions

As discussed before, AI systems are increasingly used in many sectors, ranging from health and education to e-commerce and criminal investigation. Typical AI systems rely heavily on real-world data to infer relations or draw conclusions, and are therefore susceptible to bias that stems from the data itself (biased attributes or labels) or from the systemic social biases that generated the data (e.g. recidivism or arrest records). As a result, AI models learned from real-world data can become unethical if their outputs discriminate, albeit unintentionally, against a certain group of people.

While building ethical and fair models is the ultimate goal, the minimum and most urgent criterion that AI models should satisfy is transparency, which could be the first step toward fair and ethical AI. Transparency in this sense helps answer several critical questions that keep coming up in these areas. For example, how are the decisions being made? Which factors or variables did the AI model consider, and what weight did it give each of them? How comprehensive were the training cases on which the model was built? Designing explainable AI systems that can convey the reasoning behind their results is therefore of great importance.
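To make the question "what weight did it give each factor?" concrete, the sketch below shows the simplest case in which it has an exact answer: a linear scoring model, where each feature's contribution to a decision is its weight multiplied by its value. The feature names, weights, and applicant values are hypothetical and chosen only for illustration; deployed models are rarely this simple, which is exactly why the transparency questions above are hard to answer in practice.

```python
# Hypothetical linear scoring model: feature names and weights are illustrative only.
WEIGHTS = {"income": 0.4, "existing_debt": -0.7, "years_employed": 0.2}
BIAS = 0.1


def score(applicant: dict[str, float]) -> float:
    """Return the model's score: bias plus a weighted sum of the features."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def explain(applicant: dict[str, float]) -> list[tuple[str, float]]:
    """Return each feature's contribution (weight * value), largest magnitude first."""
    contributions = [(name, WEIGHTS[name] * applicant[name]) for name in WEIGHTS]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


applicant = {"income": 1.5, "existing_debt": 2.0, "years_employed": 2.0}
print(f"score: {score(applicant):.2f}")
for name, contribution in explain(applicant):
    print(f"{name}: {contribution:+.2f}")
```

Because the contributions plus the bias add up exactly to the score, the explanation is faithful by construction: here the applicant's existing debt is the single largest factor, a statement that could be communicated to the applicant and contested. Deep or ensemble models offer no such direct decomposition.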

4.1.1 Case study: Nvidia’s self-driving car

The following case study is extracted from “The Dark Secret at the Heart of AI”.

Nvidia’s self-driving car

In late 2016, a strange self-driving car was released onto the quiet roads of Monmouth County, New Jersey. The experimental vehicle, developed by researchers at the chip maker Nvidia, didn’t look different from other autonomous cars, but it was unlike anything demonstrated by Google, Tesla, or General Motors. It showed the rising power of AI. The car didn’t follow a single instruction provided by an engineer or programmer. Instead, it relied entirely on a deep learning algorithm that had taught itself to drive by watching a human do it.

Watch the following video to learn about the AI behind this autonomous car:

DISCUSSION

- Why are people concerned about this powerful technique?

- What are the potential ethical issues with the design?

- Who would be held accountable for the ethical implications of the inscrutable algorithms that developers create?

4.1.2 Case study: Public teacher employment evaluations

The following case study is extracted from Chapter 4.2.1 (main?)

HOUSTON (CN)

A proprietary AI system was used by the Houston school district to assess the performance of its teaching staff. The system used student test scores over time to assess each teacher’s impact, and the results were then used to dismiss teachers the system deemed ineffective.

The teachers’ union challenged the use of the AI system in court. Because the software’s owners considered the algorithms used to assess teachers’ performance proprietary information, they could not be scrutinized by humans. This inscrutability was deemed a potential violation of the teachers’ civil rights, and the case was settled with the school district withdrawing the use of the system.

Read the following article to learn more about the story of EVAAS, the system at the center of this case:

4.2 Policing and prediction

In recent years, an increasing number of police forces around the world have adopted software that uses statistical data to guide their decision-making, an approach known as predictive policing. Predictive policing refers to the use of mathematical, predictive-analytics, and other analytical techniques in law enforcement to identify potential criminal activity (Wikipedia). Predictive policing does not replace conventional policing methods; rather, it enhances these traditional practices by applying advanced statistical models and algorithms. Although many police departments are convinced that predictive policing has a bright future, concerns have also been raised about using AI algorithms to forecast criminal behavior.

4.2.1 Case study: Predictive policing in the US

The following case study is extracted from Chapter 5.4.1 (main?)

Predictive policing in the US

When data analysis company Palantir partnered with the New Orleans Police Department, it began to assemble a database of local individuals to use as a basis for predictive policing. To do this, it used information gathered from social media profiles, known associates, license plates, phone numbers, nicknames, weapons, and addresses. Media reports indicated that this database covered around 1% of the population of New Orleans. After it had been in operation for six years, the program attracted a flurry of media attention as a “secretive” program. The New Orleans Police Department (NOPD) clarified that the program was not secret and had been discussed at technology conferences, but insufficient publicity meant that even some city council members were unaware of it.

A “gang member scorecard” became a focus of media coverage, and groups such as the American Civil Liberties Union (ACLU) pointed out that operations like this require more community approval and transparency. The ACLU argued that the data used to fill out these databases could be based on biased practices, such as stop-and-frisk policies that disproportionately target African American males, and this would feed into predictive policing databases. This could mean more African Americans were scanned by the system, creating a feedback loop in which they were more likely to be targeted. This should be a key consideration in any long-term use of an AI program—is the data being collected over time still serving the intended outcome, and what measures ensure that this is being regularly assessed?

The NOPD terminated its agreement with Palantir, and some defendants who had been identified through the system have raised the use of this technology in their court defense and attempted to subpoena documents relating to the Palantir algorithms from the authorities.

The Los Angeles Police Department (LAPD) still has an agreement with Palantir in relation to its LASER predictive policing system. The LAPD also runs PredPol, a system that predicts crime by area, and suggests locations for police to patrol based on previously reported crimes, with the goal of creating a deterrent effect. These programs have both prompted pushback from local civic organizations, who say that residents are being unfairly spied upon by police because their neighborhoods have been profiled, potentially creating another feedback loop in which people are more likely to be stopped by police because they live in a certain neighborhood, and once they have been stopped by police they are more likely to be stopped again.

In response, local police have invited reporters to see their predictive policing in action, arguing that it also helps communities affected by crime and pointing out that early intervention can save lives and foster positive links between police and entire communities. Some police officers were also cited in media pointing out that there needs to be sufficient public involvement and understanding and acceptance of predictive policing programs in order for them to effectively build those community links.
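The feedback loop described above can be made concrete with a deliberately crude toy model. This is a hypothetical sketch, not how PredPol, LASER, or any real product allocates patrols: it assumes that every patrol goes to the area with the most recorded crime, and that incidents are only recorded where patrols are present. Both areas have exactly the same true crime; one merely starts with a single extra record.

```python
def simulate_hotspot_patrols(initial_records, true_incidents_per_round, rounds):
    """Toy feedback-loop model (hypothetical, not any real product's algorithm).

    Each round, all patrols are sent to the area with the most *recorded*
    crime, and incidents are only recorded in the area that is patrolled.
    """
    records = list(initial_records)
    true_totals = [0] * len(records)
    for _ in range(rounds):
        # Pick the current "hotspot" purely from past records.
        hotspot = max(range(len(records)), key=lambda area: records[area])
        # Crime actually happens in every area...
        for area, incidents in enumerate(true_incidents_per_round):
            true_totals[area] += incidents
        # ...but only the patrolled area's incidents enter the data.
        records[hotspot] += true_incidents_per_round[hotspot]
    return records, true_totals


# Two areas with identical true crime; area 0 starts with one extra record.
records, true_totals = simulate_hotspot_patrols([11, 10], [5, 5], rounds=20)
print("recorded:", records)     # recorded: [111, 10]
print("actual:", true_totals)   # actual: [100, 100]
```

Although both areas experience the same amount of crime, the data end up suggesting that area 0 has more than ten times as much, and each round of the system's own output reinforces that impression. Real systems allocate patrols less crudely, but this self-reinforcing dynamic is what the civic organizations quoted above are concerned about.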

The following video gives a brief explanation of “How the LAPD Uses Data to Predict Crime”:

Read the following articles to learn more about predictive policing in the US:

4.2.2 Case study: Predictive policing in Brisbane

The following case study is extracted from Chapter 5.4.2 (main?)

Predictive policing in Brisbane

One predictive policing tool has already been modelled to predict crime hotspots in Brisbane. Using 10 years of accumulated crime data, the researchers used 70% of the data to predict crime and then checked whether the predictions correlated with the remaining 30%. The results were more accurate than existing models, with accuracy improvements of 16% for assaults, 6% for unlawful entry, 4% for drug offences and theft, and 2% for fraud. The system can predict long-term crime trends, but not short-term ones. The Brisbane study used information from the location-based app Foursquare, and incorporated information from both Brisbane and New York.
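The study's model is not described here, but its evaluation protocol (hold back part of the data, predict it from the rest, and check how well the predictions correlate with what actually happened) can be sketched. The sketch assumes the split is chronological (the earliest 70% of years used for fitting) and that "correlated" means a Pearson correlation; both are our assumptions. The counts are synthetic, and the "model" is a deliberately naive baseline that predicts each area's future level from its past average, not the method used in the Brisbane study.

```python
from math import sqrt


def chronological_split(series, train_fraction=0.7):
    """Split a time-ordered series: earliest 70% for fitting, last 30% held out."""
    cut = int(round(len(series) * train_fraction))
    return series[:cut], series[cut:]


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)


# Synthetic yearly incident counts for four hypothetical areas (10 years each).
history = {
    "area_a": [30, 32, 31, 35, 34, 36, 38, 37, 40, 41],
    "area_b": [12, 11, 13, 12, 14, 13, 12, 13, 12, 14],
    "area_c": [5, 6, 4, 5, 7, 6, 5, 6, 5, 6],
    "area_d": [20, 22, 21, 19, 23, 22, 24, 23, 25, 24],
}

predicted, observed = [], []
for counts in history.values():
    train, test = chronological_split(counts)
    # Naive stand-in model: predict the held-out years from the training average.
    predicted.append(sum(train) / len(train))
    observed.append(sum(test) / len(test))

print(f"correlation on held-out 30%: {pearson(predicted, observed):.3f}")
```

Splitting chronologically matters: shuffling years at random would let the model "see the future" and overstate its accuracy. Note also that a high correlation across areas measures how well long-run levels are ranked, not whether individual short-term incidents are predicted.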

Predictive policing tools typically use four broad types of information:

- Historical data, such as the long-term crime patterns recorded by police as crime hotspots.

- Geographical and demographic information, including distances from roads, median house values, marriages, and the socioeconomic and racial makeup of an area.

- Social media information, such as tweets about a particular location and keywords.

- Human mobility information, such as mobile phone usage and check-ins and the associated distribution in the population.

The Brisbane study primarily used human mobility information. Further research is needed, but the study suggests that some types of input information may yield more accurate predictions than others. Careful consideration may therefore warrant emphasizing some types of input over others, since they have varying impacts on privacy and less intrusive approaches may be less likely to provoke public distrust. Public trust, as the case studies from the US demonstrate, is crucial to the effectiveness of predictive policing tools.

DISCUSSION

- From the case studies above, what conclusions can we draw about predictive policing?

- What can we learn from these existing applications?

- What are the potential ethical issues that arise with the advent of predictive policing?

- Do you know of similar stories from other countries?

- What might be the potential impact on the citizens?

- How should we deal with them?

The debate over the use of AI in policing is ongoing, and predictive policing tools are increasingly being marketed in various countries, including the US, Australia, and the UK.

One clear outcome has been that if new technologies are used in law enforcement, there is an essential need for regular processes by which to put these new technologies before elected officials and the communities they serve. These technologies will only work if the community is comfortable with the degree to which this type of information is being applied and if they’re aware of how the information is being used. This transparency is also the core of ensuring they remain ethical and accountable to the communities they protect. Without public endorsement and support, these systems cannot effectively serve the police or the general public.

4.3 AI in Healthcare and medicine

Health care organizations are leveraging AI techniques, such as machine learning, to improve the delivery of care at a reduced cost. What does AI mean for the future of health care? Watch the following short video from Stanford Medicine to learn about the current state of AI in healthcare and medicine.

The following talk by Greg Corrado, co-founder of Google Brain and Principal Scientist at Google, covers the enormous role that AI and machine learning will play in the future of health and medicine, and why doctors and other healthcare professionals must play a central role in that revolution.

4.3.1 Case Study: Predicting coma outcomes

The following case study is extracted from Chapter 5.5.1 (main?)

Predicting coma outcomes

A program in China that analyses the brain activity of coma patients was able to successfully predict seven cases in which the patients went on to recover, despite doctor assessments giving them a much lower chance of recovery. This AI system took examples of previous scans, and was able to detect subtle brain activity and patterns to determine which patients were likely to recover and which were not. One patient was given a score of just seven out of 23 by human doctors, which indicated a low probability of recovery, but the AI system gave him over 20 points. He subsequently recovered. His life may have been saved by the AI system.

If this AI system lives up to its potential, then this kind of tool would be of immense value in saving human lives by spotting previously hidden potential for recovery in coma patients—those given high scores can be kept on life support long enough to recover.

But it prompts the question: what about people with low scores?

Rigorous peer-reviewed research should be conducted before such systems are relied upon to inform clinical decisions. Ongoing monitoring, auditing, and research are also required. Assuming the AI is accurate—and a number of patients with low scores would need to be kept on life support to confirm the accuracy of the system—then the core questions will revolve around resourcing. Families of patients may wish to try for recovery even if the odds of success are very small. It is crucial that decisions in such cases are made among all stakeholders and don’t hinge solely on the results from a machine. If resources permit, then families should have that option.

Read the following article to learn more about this story:

4.3.2 Case study: AI and health insurance

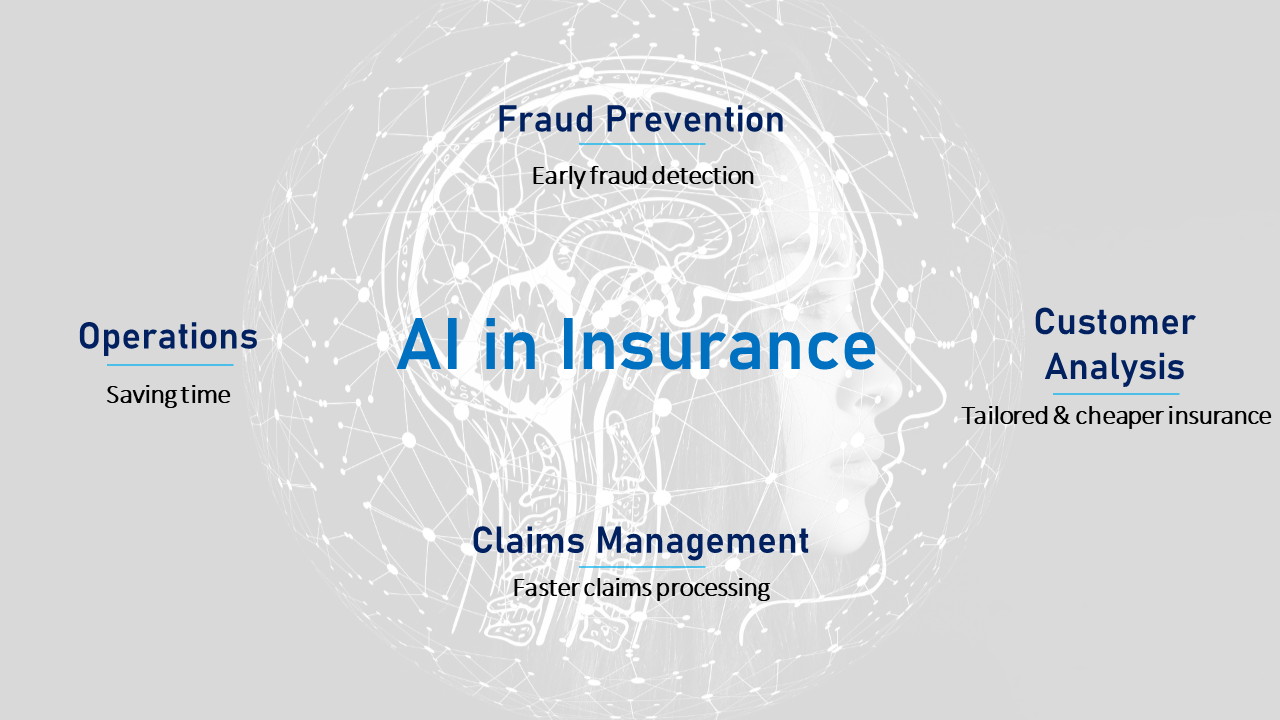

The health insurance industry has also made significant progress in AI implementation. There are many examples of insurers around the world implementing AI to improve their bottom line as well as the customer experience, and numerous startups provide AI solutions for insurers and customers. Common areas where AI can help the insurance industry include:

- Operations and claims management: AI-enabled chatbots can be developed to understand and answer the bulk of customer queries over email, chat, and phone. This can free up significant time and resources for insurers, and can also benefit customers through faster claims processing and responses.

- Fraud detection: AI models can be used to recognize fraud patterns and reduce fraudulent claims.

- Customer analysis: AI techniques can be used to price insurance policies more competitively and relevantly and recommend useful products to customers. Insurers can price products based on individual needs and lifestyle so that customers only pay for the coverage they need. This increases the appeal of insurance to a wider range of customers, some of whom may then purchase insurance for the first time.

If AI can deliver more accurate predictions in areas like healthcare, this has obvious implications for insurance. If an AI can assess someone’s health more accurately than a human physician, this is an excellent result for people in good health: they can receive lower premiums, which benefits both them and the insurance company because of the lower risk. But what happens when the AI detects a hard-to-spot health problem and the insurer increases that person’s premium, or denies them coverage altogether, in order to offer lower premiums to other customers or to increase profit?

DISCUSSION

- What is the general health insurance regulation in Australia?

- What impact will AI have on Australia’s health insurance industry?

- Are there any ethical aspects we should consider with regard to using AI techniques in health insurance?

4.4 Explainable and interpretable AI

AI has demonstrated impressive practical success in many different application domains, as we have seen. However, a central problem is that many such models are regarded as black boxes. Even if we understand the mathematical principles underlying such models, they lack an explicit declarative knowledge representation, and hence have difficulty generating the underlying explanatory structures. This calls for AI systems whose decisions are transparent, understandable, and explainable. This week, we will explore existing techniques for interpreting and explaining AI models and their decisions.
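As one illustration of such a technique, the sketch below implements permutation feature importance, a widely used model-agnostic, post-hoc method chosen here by us rather than taken from the source: treat the model as a black box, shuffle one input feature at a time, and measure how much the prediction error grows. The model, feature names, and data are hypothetical.

```python
import random


def model(row):
    """Hypothetical black-box model: it happens to use only 'x1' and 'x2'."""
    return 3.0 * row["x1"] - 2.0 * row["x2"]


def mean_squared_error(rows, targets):
    """Average squared difference between model predictions and targets."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, feature, repeats=20, seed=0):
    """Average increase in error when one feature's column is randomly shuffled."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    baseline = mean_squared_error(rows, targets)
    increases = []
    for _ in range(repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        # Copy each row, replacing only the chosen feature with a shuffled value.
        shuffled = [{**r, feature: v} for r, v in zip(rows, column)]
        increases.append(mean_squared_error(shuffled, targets) - baseline)
    return sum(increases) / repeats


# Synthetic data: 'x3' is present in the input but ignored by the model.
rows = [{"x1": i, "x2": (i * 7) % 5, "x3": (i * 3) % 4} for i in range(8)]
targets = [model(r) for r in rows]
for feature in ("x1", "x2", "x3"):
    print(feature, round(permutation_importance(rows, targets, feature), 3))
```

Shuffling x3 leaves the error unchanged because the model never reads it, so its importance is exactly zero, while x1 and x2 receive positive scores. The method needs only the ability to query the model, so it can be applied even where the internals are hidden; however, it describes the model's overall behavior rather than explaining any single decision.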

4.5 From Explainable AI to Human-centered AI

As AI systems become ubiquitous in our lives, the human side of the equation needs careful investigation. The challenges of designing and evaluating “black-boxed” AI systems depend crucially on who the human in the loop is.

Watch the following TEDx talk, in which Prof. Andreas Holzinger discusses explaining why a machine decision has been reached, paving the way towards explainable AI and causality, and ultimately fostering ethically responsible machine learning.

Explanations, mostly viewed as a form of post-hoc interpretability, can help establish rapport, confidence, and understanding between an AI system and its users, especially when it comes to understanding failures and unexpected AI behavior. Systematic human-subject studies are needed to understand how different strategies for generating explanations affect end users, especially those who are not AI experts. Explainability in AI is therefore also a Human-Computer Interaction (HCI) problem.