Chapter 3 The AI Bias

Source from https://medium.com/thoughts-and-reflections/racial-bias-and-gender-bias-examples-in-ai-systems-7211e4c166a1

Automated decision-making systems enabled by AI can limit issues associated with human bias, but only if due care is focused on the data used by those systems and the ways they assess what is fair and safe. In this chapter, we are going to explore the issues of data bias and algorithm bias in AI, and the corresponding approaches and technologies towards these problems.

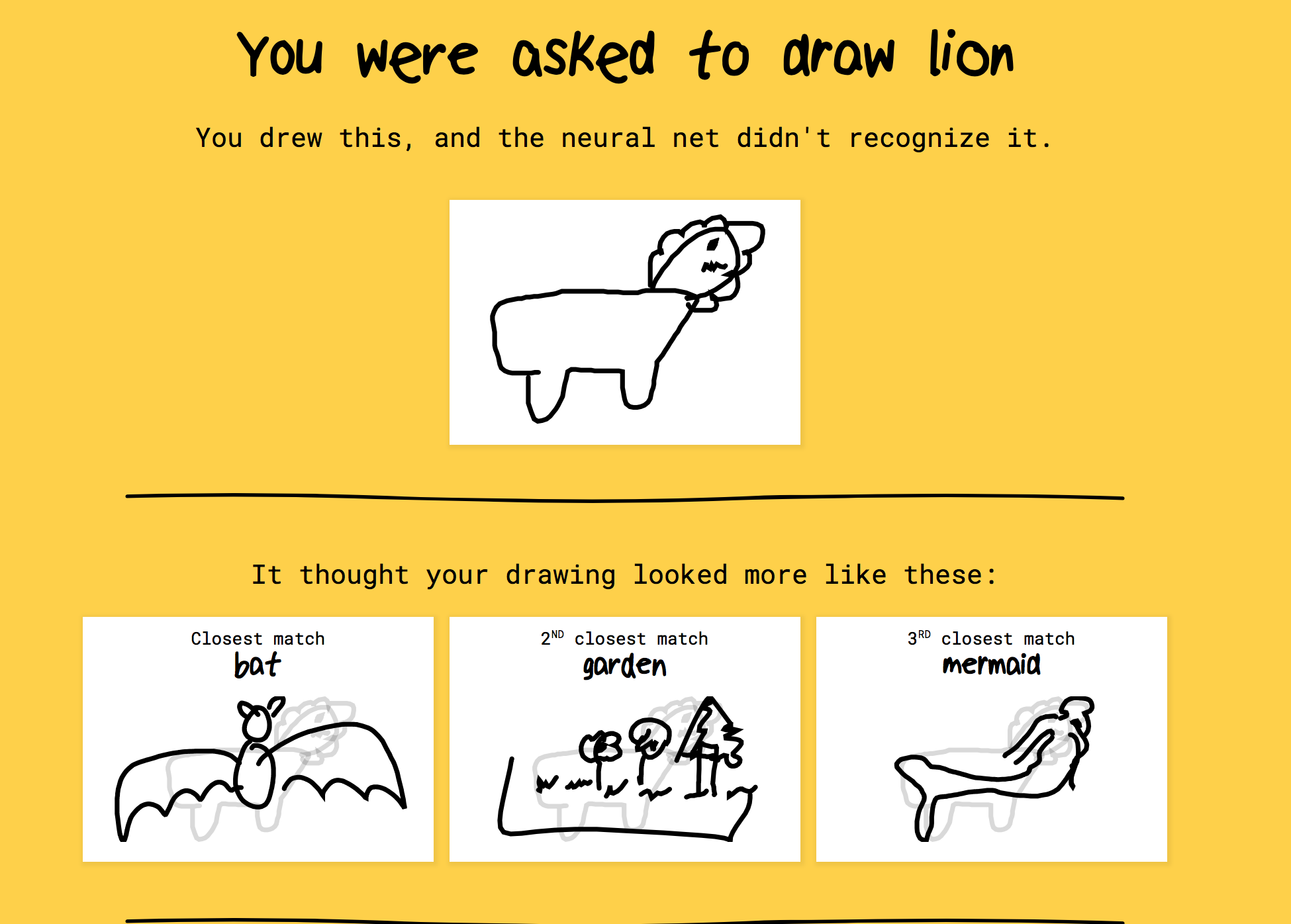

Google’s Quick Draw. Source from https://sites.psu.edu/kylebradleypassionblog/

Google’s recent “Quick, Draw!” experiment vividly demonstrates why addressing bias matters. Perhaps many of you participated in the game. In case you didn’t, the experiment invited internet users worldwide to participate in a fun game of drawing. In every round of the game, users were challenged to draw an object in under 20 seconds. The AI system would then try to guess what their drawing depicts. More than 20 million people from 100 nations participated in the game, resulting in over 2 billion diverse drawings of all sorts of objects, including cats, chairs, postcards, butterflies, skylines, etc.

When the researchers examined the drawings of shoes in the data-set, they realized that they were dealing with strong cultural bias.

Watch the following video from Google to explore the story, and understand a bit more why bias matters in AI systems.

3.1 Bias

Bias in data can exist in many shapes and forms, some of which can lead to unfairness in different downstream learning tasks. Following are some common categories of bias (bias?):

- Historical bias. Historical bias is the already existing bias and socio-technical issues in the world and can seep into from the data generation process even given a perfect sampling and feature selection.

- Representation Bias. Representation bias happens from the way we define and sample from a population feature selection.

- Measurement Bias. Measurement bias happens from the way we choose, utilize, and measure a particular feature.

- Evaluation Bias. Evaluation bias happens during model evaluation. This includes the use of inappropriate and disproportionate benchmarks for the evaluation of applications.

- Aggregation Bias. Aggregation bias happens when false conclusions are drawn for a subgroup based on observing other different subgroups or generally when false assumptions about a population affect the model’s outcome and definition.

- Population Bias. Population bias arises when statistics, demographics, representatives, and user characteristics are different in the user population represented in the dataset or platform from the original target population.

- Sampling Bias. Sampling bias arises due to the non-random sampling of subgroups. As a consequence of sampling bias, the trends estimated for one population may not generalize to data collected from a new population.

- Temporal Bias. Temporal bias arises from differences in populations and behaviors over time. Social Bias. Social bias happens when other people’s actions or content coming from them affect our judgment.

In this week’s workshop, we will explore in more detail the different types of bias with examples.

3.1.1 Case study: The Microsoft chatbot

The following case study is extracted from Chapter 3.4.1 (main?)

Tay: The Microsoft chatbot

Tay the Twitter chatbot was developed by Microsoft as a way to better understand how AI interacts with human users online. Tay was programmed to learn to communicate through interactions with Twitter users – in particular, its target audience was young American adults. However, the experiment only lasted 24 hours before Tay was taken offline for publishing extreme and offensive sexist and racist tweets.

The ability for Tay to learn from active real-time conversations on Twitter opened the chatbot up to misuse, as its ability to filter out bigoted and offensive data was not adequately developed. As a result, Tay processed, learned from and created responses reflective of the abusive content it encountered, supporting the adage, ‘garbage in, garbage out.’

Read the following article to investigate more on the story of Tay:

3.1.2 Case study: Amazon same-day delivery

The following case study is extracted from Chapter 3.4.2 (main?)

Amazon’s same-day delivery

Amazon recently rolled out same-day delivery across a select group of American cities. However, this service was only extended to neighborhoods with a high number of current Amazon users. As a result, predominantly non-white neighborhoods were largely excluded from the service.

Read the following article to investigate more on this story:

3.1.3 Case study: Facial recognition is accurate if you’re a white guy

Accuracy in datasets is rarely perfect and the varying levels of accuracy can in and of themselves produce unfair results—if an AI system makes more mistake for one racial group, as has been observed in facial recognition systems, that can constitute racial discrimination.

The following case study is extracted from https://medium.com/thoughts-and-reflections/racial-bias-and-gender-bias-examples-in-ai-systems-7211e4c166a1COMPAS

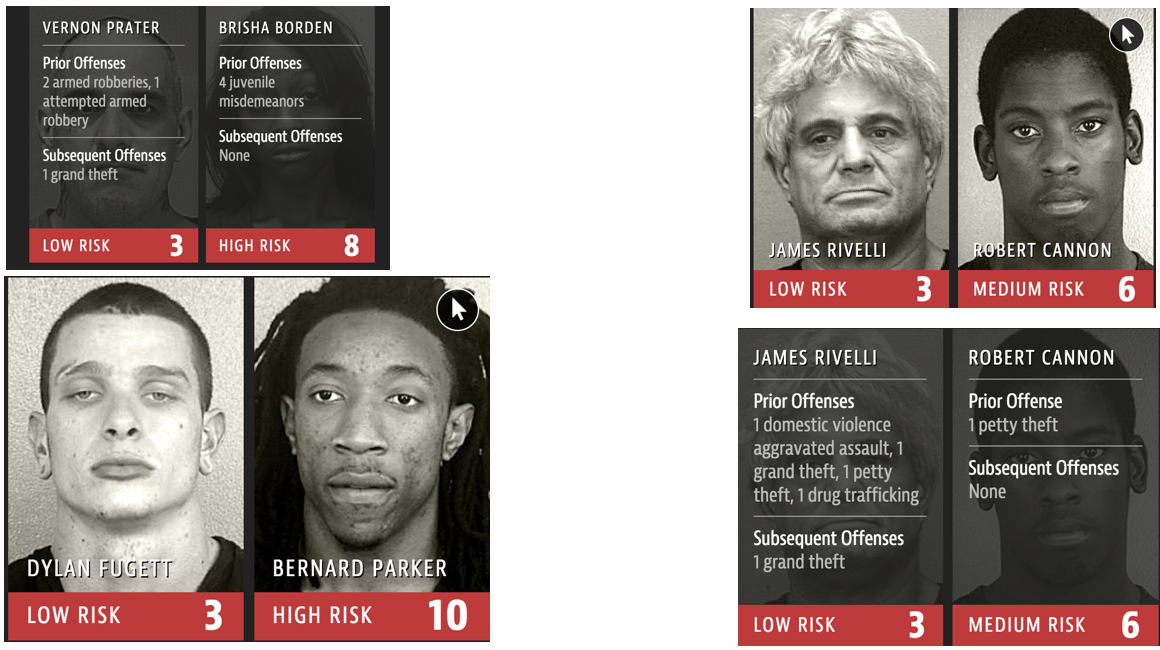

ProPublica, a nonprofit news organisation, had critically analysed risk assessment software powered by AI known as COMPAS. COMPAS has been used to forecast which criminals are most likely to re-offend. Guided by these risk assessments, judges in courtrooms throughout the United States would generate conclusions on the future of defendants and convicts, determining everything from bail amounts to sentences. The software estimates how likely a defendant is to re-offend based on his or her response to 137 survey questions.

ProPublica compared COMPAS’s risk assessments for 7,000 people arrested in a Florida county with how often they reoffended. It was discovered that the COMPAS algorithm was able to predict the particular tendency of a convicted criminal to re-offend. However, when the algorithm was wrong in its prediction, the results were displayed differently for black and white offenders. Through COMPAS, black offenders were seen almost twice as likely as white offenders to be labeled as a higher risk but not actually re-offend. While the COMPAS software produced the opposite results with whites offenders: they were identified to be labeled as a lower risk more likely than black offenders despite their criminal history displaying higher probabilities to re-offend. (examples of results are shown in the following figure).

Read the following article in the New York Times to investigate more on this story:

3.1.4 Case study: AI in healthcare and medicine

Around the world, AI is making all kinds of predictions about people, ranging from whether they will buy a particular product to potential health issues they might have.

AI can provide enormous benefits for healthcare and medicine.

However, it’s a challenging philosophical question with a wide range of viewpoints.

The heart of big data and AI is all about risk and probabilities, which humans struggle to accurately assess.

Read through the following article from nature to learn more about AI in healthcare and medicine, and the relevant ethical implications.

3.2 Algorithmic fairness

AI is not driven by human bias, however, it is programmed by humans. Therefore, AI systems can be susceptible to the biases of its programmers, or can end up making flawed judgments based on flawed information. Even when the information is not flawed, if the priorities of the system are not aligned with expectations of fairness, then the system can deliver negative impacts

3.2.1 Case study: The Amazon hiring tool

Automation has been key to Amazon’s e-commerce dominance, be it inside warehouses or driving pricing decisions. The company’s experimental hiring tool used AI to give job candidates scores ranging from one to five stars - much like shoppers rate products on Amazon.

The following case study is extracted from Chapter 5.3.1 (main?)The Amazon hiring tool

In 2014, a global e-commerce company Amazon began work on an automated resume selection tool. The goal was to have a system that could scan through large numbers of resumes, and determine the best candidates for positions, expressed as a rating between one and five stars. The tool was given information on job applicants over the preceding ten years. In 2015, the researchers realized that because of male dominance in the tech industry, the tool was now assigning higher ratings to men than women. This was not just a problem of there being larger numbers of qualified male applicants—the keyword “women” appearing in resumes resulted in them being downgraded. The tool was designed to look beyond the common keywords, such as particular programming languages, and focus on more subtle cues like the types of verbs used. Certain favored verbs like “executed” were more likely to appear on the resumes of male applicants. The technology not only unfairly advantaged male applicants but also put forward unqualified applicants. The hiring tool was scrapped, likely due to these problems.

Read the following article in the New York Times to investigate more on this story:

3.3 Dealing with bias and unfairness in AI

The above case studies demonstrate the need for critical assessments of bias and fairness in data inputs and the developed algorithms used to make decisions and create outputs.

Watch the following video for a general introduction to algorithmic bias and fairness AI systems.

In scientific research, strategies and methods have been developed to evaluate bias and fairness in AI models, as well as to optimize data inputs and sampling and reduce the impact of bias.

There are a large number of toolkits available to help evaluate fairness, AI Fairness 360 is one of the most comprehensive ones.

3.4 Thinking About Bias from Another Angle.

Guest Lecture: The Trouble with Bias - NIPS2017

Bias is a major issue in machine learning. But can we develop a system to “un-bias” the results? In this keynote at NIPS 2017, Kate Crawford argues that treating this as a technical problem means ignoring the underlying social problem, and has the potential to make things worse.

Speaker Bio: Kate Crawford is a leading researcher, academic and author who has spent the last decade studying the social implications of data systems, machine learning, and artificial intelligence. She is a Distinguished Research Professor at New York University, a Principal Researcher at Microsoft Research New York, and a Visiting Professor at the MIT Media Lab.